TL;DR

- Google Search Console now allows daily bulk imports into Google BigQuery.

- Google BigQuery is an accelerator for Google Looker Studio (faster querying & reporting!).

- Here is a walkthrough on how to set this up + create a Google Looker Template to report your data.

It’s finally here, the bulk export from Google Search Console to BigQuery (and eventually Google Looker Studio).

Hopefully, you already have experience in at least 2 of these tools (GSC & Looker), but if not there will be plenty of resources and steps that anyone should be able to complete this process.

This article will assume you have an active BigQuery & Google Search Console account. If you do not follow these articles to get them set up.

Google Search Console How-To Guides

Google Looker Studio Beginners Guide

Google’s guide below does a great job explaining the steps to get the bulk setup going so I won’t bother diving into that. In this post, I’ll cover the quick steps to take it to the next level. I recommend checking out the screenshots when reading the directions, hopefully, it makes it a lot clearer. If not please reach out to me on my socials to let me know!

Google Search Console Bulk Export Video Walkthrough

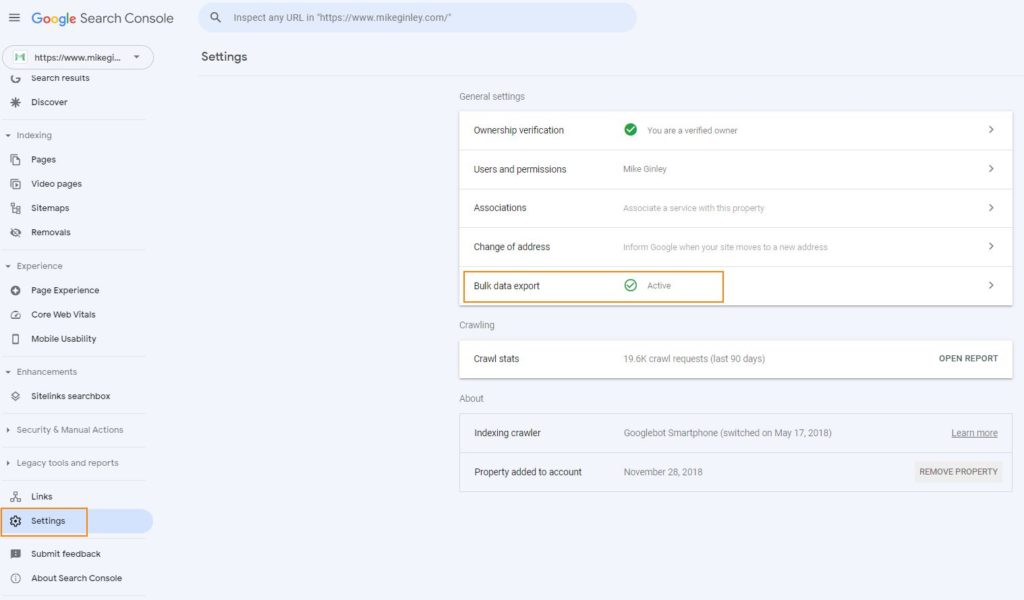

Step 1: Inside Google Search Console

- Go to your Google Search Console profile Settings in the bottom left.

- Bulk data export should be an option.

- Instructions on how to start a new bulk data export.

- The directions in the link above + the images below should be enough to walk you through.

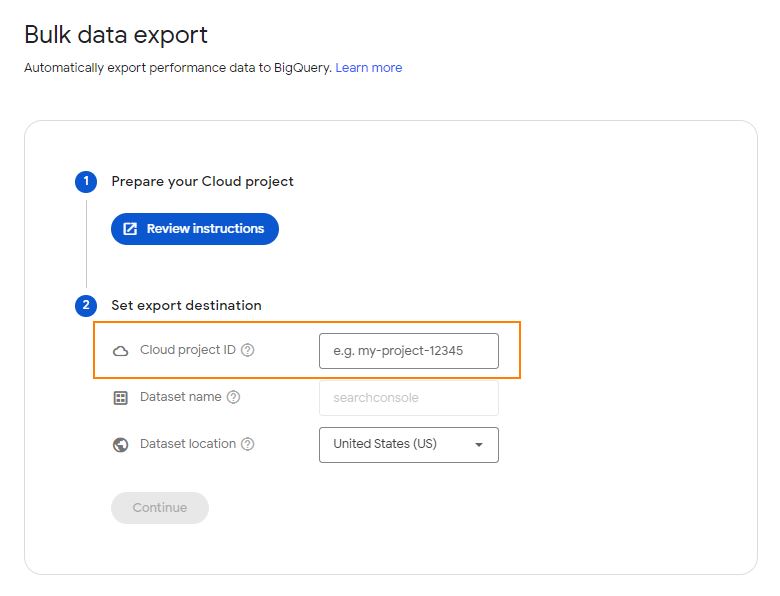

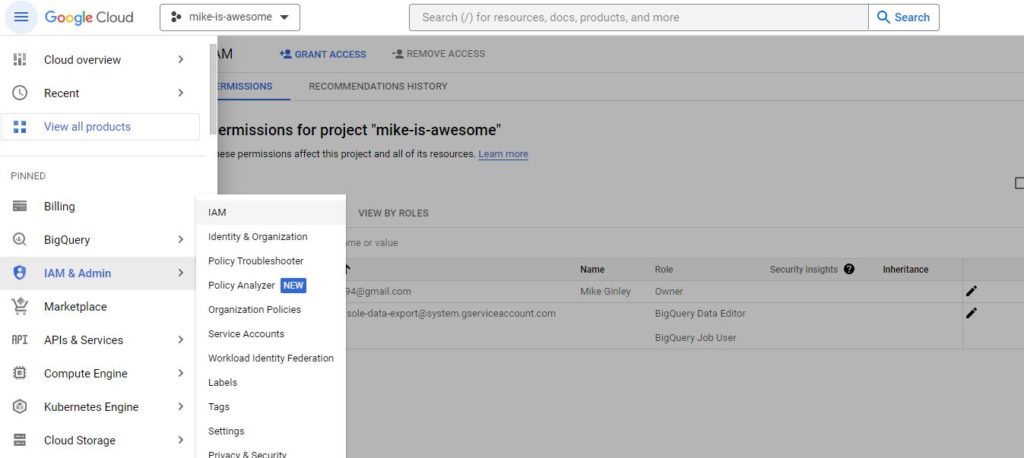

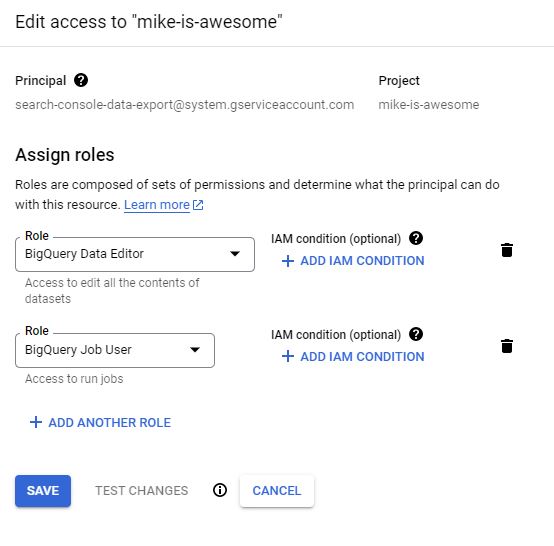

- Essentially you need to give GSC your BigQuery ProjectID (mine is mike-is-awesome) & give BigQuery some Search Console permissions (BQ Data Editor & BQ Job User).

- After this, you will get a notice that it’ll take up to 48 hours for the table to start populating (mine was done the next morning).

- If you don’t set something up correctly here Google will not let you save it so there shouldn’t be any issues you need to worry about.

- Recheck the setup steps and try saving again if Google stops you from saving.

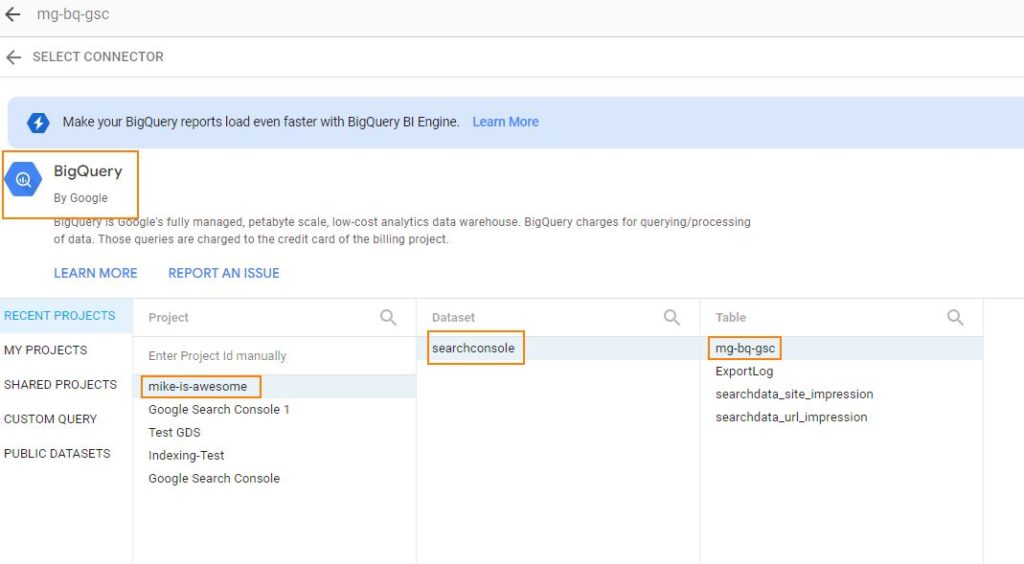

Step 2: Over to BigQuery

Check the next morning in BigQuery after you set this up to see if the table has started to populate with data. If it hasn’t, ensure that Google Search Console Bulk Export shows as Active.

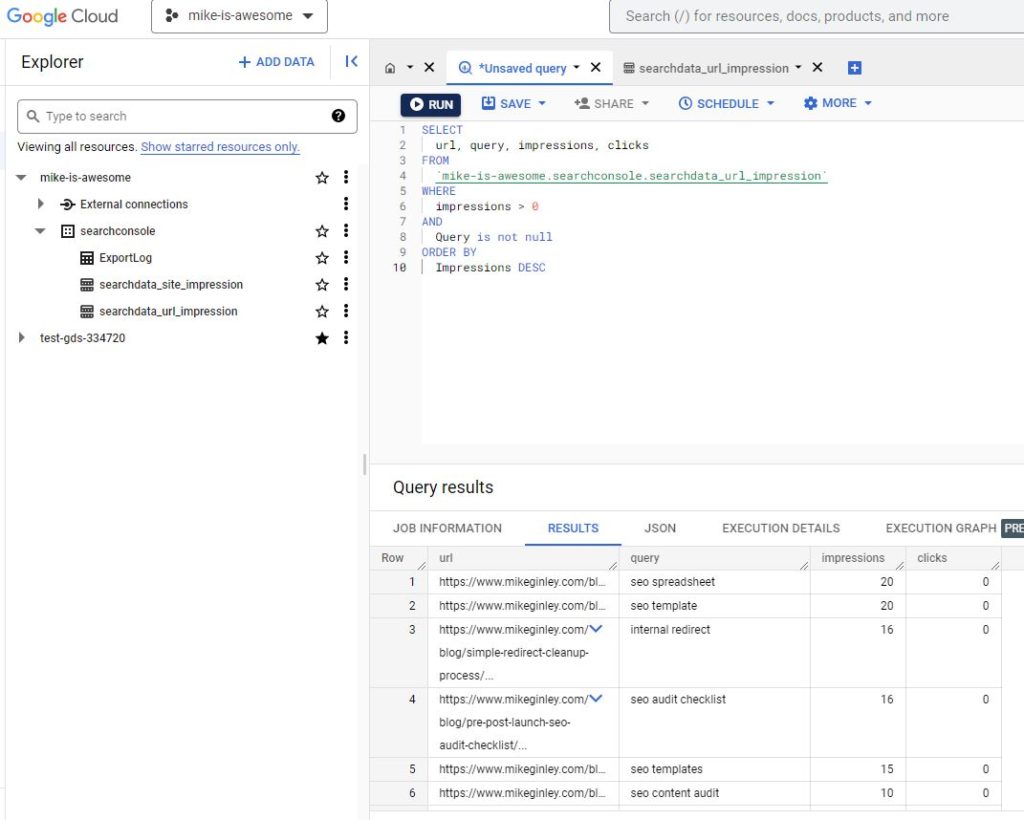

- In the side nav -> BigQuery -> SQL Workspace.

- Find your project, expand it and look for the ‘search console dataset‘.

- Then you will see 2 tables (URL & Site) – More info on URL vs. Site data.

- From there you can start querying data in the SQL workspace.

- If you are comfortable with SQL definitely try it out, if not there are some resources below that can get you started.

- This is mainly to test that the data is properly flowing into the BQ table, I prefer reporting through Looker Studio, but you can absolutely report through here. There is a ton SEOs can do with this new functionality!

- There will be some queries as ‘null’ due to Anonymized queries, I just filter these out in BigQuery and my Looker Studio Template.

Simple SQL query to test your data:

SELECT

query, url, impressions, clicks

FROM

`INSERTPROJECTID.searchconsole.searchdata_url_impression`

Where

query is not null

Order By

Impressions DESC

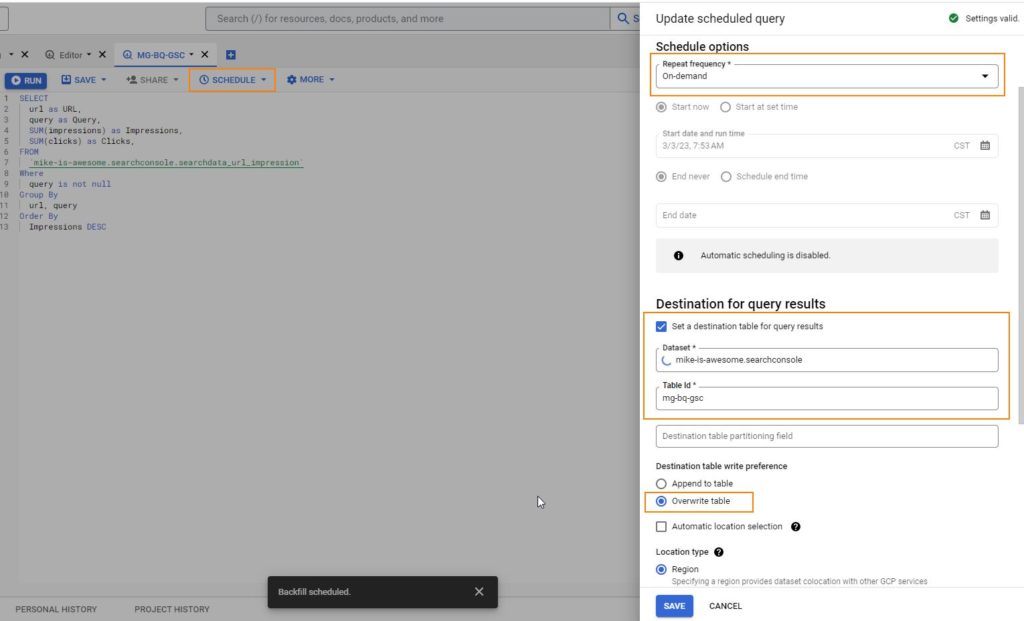

Step 3: New Table In BigQuery

Since this is a daily import you need to aggregate the data and store it in a table that you can connect to Google Looker Studio.

SELECT

url as URL,

query as Query,

SUM(sum_position)/ SUM(impressions) + 1 as Position,

SUM(impressions) as Impressions,

SUM(clicks) as Clicks,

FROM

`INSERTPROJECTID.searchconsole.searchdata_url_impression`

Where

Where

query is not null

AND sum_position < 25

Group By

url, query

Order By

Impressions DESC- In the BigQuery SQL editor, use the code from above with your table referenced.

- Run the Query to verify accuracy.

- Click the Schedule button at the top of the editor and then Create a New Scheduled Query. (See my setup below).

- Choose how frequently you want it to run (I chose on demand just to be in more control) but the beauty is you can fully automate this and have it set to daily!

- Set a Destination Table

- Choose your Datasset (ID we have been working with).

- Create a TableID (name it whatever, we’ll need this for Looker Studio).

- Choose to Overwrite Table

- Save

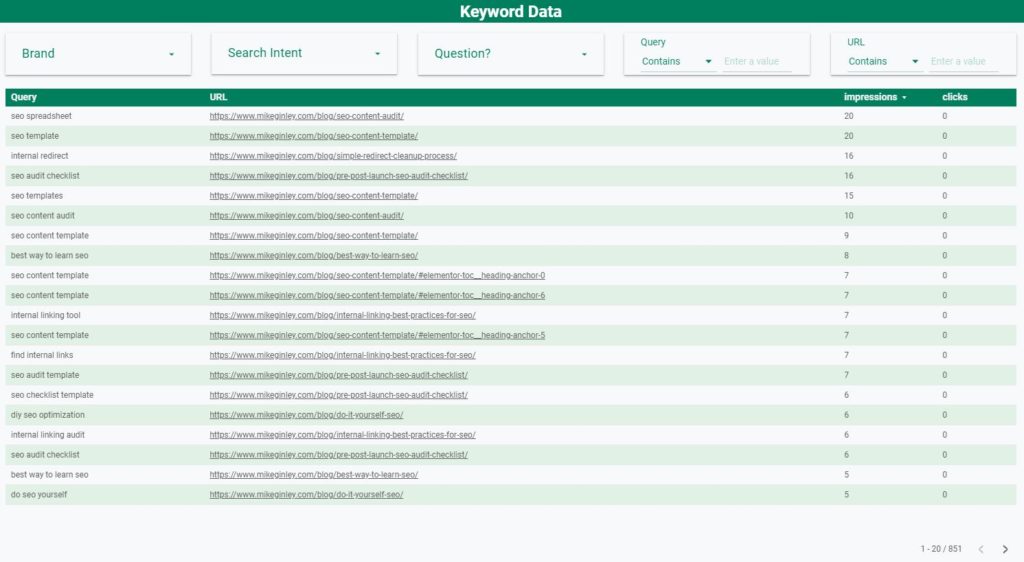

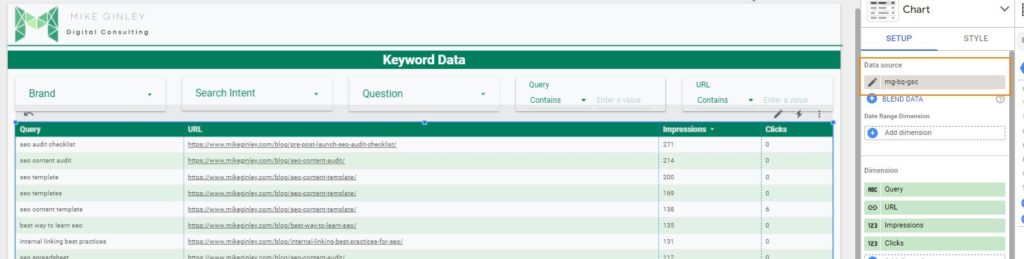

Step 4: Now Onto Google Looker Studio

This is where you can do a lot to simplify reporting!

Here is a copy of my Google Looker Studio Search Console Reporting Template. Use the Bulk BQ Test, the other sheet is from my old process to bulk export Google Search Console Keywords.

- In the top right 3 dots, click and make a copy.

- From there you can add your data sources, examples below.

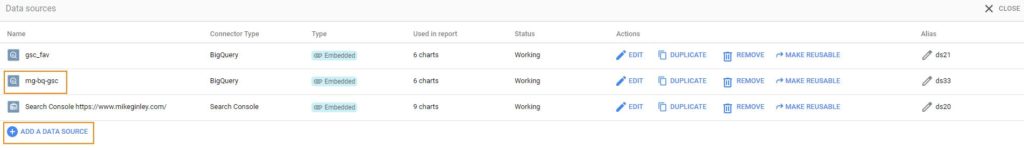

- Under Resources -> Manage Added Data Sources.

- Add New Data Source.

- Select BigQuery.

- Choose Your Project, Dataset & Scheduled Table that you set up above.

- Save data.

- Ensure that the Looker Table is using this new data source.

- You should now see your data from BigQuery populating.

- If the metrics from below have changed you may have to manually update them similar to what I have below. I haven’t figured out why changing the data source sometimes changes the table metrics.

- Customize this table any way you want it!

- Google Looker Studio Filters

- Combine tables in BigQuery to report on

Step 5: What Do I Do With Bulk Google Search Console Data?

Now you have access to a ton of great data related to people discovering your website!

- Are they clicking on the queries you think they should be?

- Are you not seeing terms with impressions & clicks that are related to your core services/products?

- Are there URLs ranking for the same keywords & not getting clicks?

There is so much you can do with this data. when you find queries that aren’t performing it may be time to refresh them. Use this fool-proof SEO Content Template to ensure you are writing for the users!

Check out the resources below to learn more about how to use BigQuery & SQL. You can also check out all my other articles to see how you can better utilize Looker Studio & SEO Templates!